Infrastructure Notes: Azure Storage Overview

Basics

Microsoft’s cloud storage solution. ‘Massively’ scalable object store:

- data objects

- file system service for the cloud

- messaging store for reliable messaging

- NoSQL store

All data written to Azure Storage is encrypted by the service.

Access is via HTTP or HTTPS.

API:

Microsoft provides client libraries for .NET, Java, Node.js, Python, PHP, Ruby and Go, plus a mature REST API. (There are probably more languages, and the list changes frequently, but the REST API lets you work from whatever language you like.)
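The REST API is the common denominator, so here’s a minimal Python sketch that builds the request URL for the Blob service’s List Blobs operation (`restype=container&comp=list`). The account and container names are borrowed from the AzCopy examples further down. Authorization (Shared Key, SAS or an Azure AD token) and the required version/date headers are deliberately left out, so treat this as an illustration of the URL shape only.

```python
from typing import Optional
from urllib.parse import quote, urlencode


def list_blobs_url(account: str, container: str, prefix: Optional[str] = None) -> str:
    """URL for the Blob service 'List Blobs' operation on one container."""
    params = {"restype": "container", "comp": "list"}
    if prefix:
        params["prefix"] = prefix  # only return blobs whose names start with this
    return f"https://{account}.blob.core.windows.net/{quote(container)}?{urlencode(params)}"


print(list_blobs_url("dbsectest", "testcontainer"))
```

Sending a GET to that URL with a valid Authorization header (or with a SAS appended) returns the container listing as XML.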

Key data services:

- Azure Blobs: Scalable object store for text and binary data.

- Azure Files: Managed file shares for cloud or on-premises deployments.

- Azure Queues: A messaging store for reliable messaging between application components.

- Azure Tables: A NoSQL store for schema-less storage of structured data. (Azure Table storage is now part of Azure Cosmos DB.)

- Disk storage: An Azure managed disk is a virtual hard disk (VHD). It’s called a ‘managed’ disk because it is an abstraction over page blobs, blob containers, and Azure storage accounts.

‘Azure Files’ is interesting:

- Highly available network file shares that can be accessed by using the standard Server Message Block (SMB) protocol.

- One thing that distinguishes Azure Files from files on a corporate file share is that you can access the files from anywhere in the world using a URL that points to the file and includes a shared access signature (SAS) token.

- You can generate SAS tokens; they allow specific access to a private asset for a specific amount of time.

- At the time of writing, Active Directory-based authentication and access control lists (ACLs) are not supported, apart from the Azure AD preview listed under authentication below.

- The storage account credentials are used to provide authentication for access to the file share. This means anybody with the share mounted will have full read/write access to the share.

Authentication for Storage Accounts

- Azure Active Directory (Azure AD) integration for blob and queue data (recommended)

- Azure AD authorization over SMB for Azure Files (in preview at the time of writing)

- Authorization with Shared Key

- Authorization using shared access signatures (SAS: a string containing a security token that can be appended to the URI for a storage resource. The security token encapsulates constraints such as permissions and the interval of access.)

- Anonymous access to containers and blobs
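Shared Key authorization comes down to an HMAC-SHA256 signature computed with the (Base64-decoded) account key. The minimal Python sketch below shows just that signing step and the resulting `Authorization: SharedKey <account>:<signature>` header value. The real string-to-sign (HTTP verb, selected headers, canonicalized resource) is defined in Microsoft’s "Authorize with Shared Key" docs and is not reproduced here. The key and string-to-sign in the example are hypothetical placeholders.

```python
import base64
import hashlib
import hmac


def shared_key_signature(account_key_b64: str, string_to_sign: str) -> str:
    """Base64(HMAC-SHA256(Base64Decode(account key), UTF-8 bytes of the string-to-sign))."""
    key = base64.b64decode(account_key_b64)
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def shared_key_header(account: str, account_key_b64: str, string_to_sign: str) -> str:
    """Value for the Authorization header of a Shared Key request."""
    return f"SharedKey {account}:{shared_key_signature(account_key_b64, string_to_sign)}"


# Hypothetical key and string-to-sign, for illustration only.
fake_key = base64.b64encode(b"not-a-real-account-key").decode("ascii")
print(shared_key_header("dbsectest", fake_key, "GET\n<canonicalized headers and resource>"))
```

This is also why the account key is so sensitive: anyone holding it can sign any request. A service or account SAS `sig` value is likewise an HMAC-SHA256 signature, just over a different string-to-sign.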

Encryption

- Encryption at rest is provided transparently by the service. You can use your own keys, managed in Azure Key Vault, if needed.

- Client-side encryption is also offered, where data is encrypted before it is sent over the wire. It’s implemented in the client libraries and supported by Azure Storage.

Redundancy

- Locally-redundant storage (LRS): low-cost replication. Data is replicated synchronously three times within a single physical location in the primary region.

- Zone-redundant storage (ZRS): high availability. Data is replicated synchronously across three Azure availability zones in the primary region.

- Geo-redundant storage (GRS): Cross-regional replication. Data is replicated synchronously three times in the primary region, then replicated asynchronously to the secondary region. For read access to data in the secondary region, enable read-access geo-redundant storage (RA-GRS).

- Geo-zone-redundant storage (GZRS) (in preview): Replication for scenarios requiring both high availability and maximum durability. Data is replicated synchronously across three Azure availability zones in the primary region, then replicated asynchronously to the secondary region. For read access to data in the secondary region, enable read-access geo-zone-redundant storage (RA-GZRS).
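To pick between these in practice, you pass a SKU name to `az storage account create --sku`. Here is a small Python sketch that encodes the list above and maps requirements to a SKU. The copy counts assume the geo options add three more copies in the secondary region (replicated there as LRS, per Microsoft’s docs). The `pick_option` helper is hypothetical and only reflects the options listed here.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Redundancy:
    sku: str                  # value for `az storage account create --sku`
    copies: int               # total copies of the data across all locations
    zone_redundant: bool      # primary copies spread over three availability zones
    geo_replicated: bool      # asynchronously copied to a secondary region
    secondary_readable: bool  # read access to the secondary (the RA- variants)


OPTIONS: Dict[str, Redundancy] = {
    "LRS": Redundancy("Standard_LRS", 3, False, False, False),
    "ZRS": Redundancy("Standard_ZRS", 3, True, False, False),
    "GRS": Redundancy("Standard_GRS", 6, False, True, False),
    "RA-GRS": Redundancy("Standard_RAGRS", 6, False, True, True),
    "GZRS": Redundancy("Standard_GZRS", 6, True, True, False),
    "RA-GZRS": Redundancy("Standard_RAGZRS", 6, True, True, True),
}


def pick_option(zone_redundant: bool, geo_replicated: bool, secondary_readable: bool = False) -> Redundancy:
    """Return the option from the list above that matches the stated needs."""
    if secondary_readable:
        geo_replicated = True  # reading from a secondary only makes sense with geo-replication
    if geo_replicated:
        name = "GZRS" if zone_redundant else "GRS"
        if secondary_readable:
            name = "RA-" + name
    else:
        name = "ZRS" if zone_redundant else "LRS"
    return OPTIONS[name]


print(pick_option(zone_redundant=True, geo_replicated=True, secondary_readable=True).sku)
```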

Working with it (Az CLI)

If you don’t already have the Az CLI installed, you can install it from a PowerShell prompt running as administrator with:

Invoke-WebRequest -Uri https://aka.ms/installazurecliwindows -OutFile .\AzureCLI.msi; Start-Process msiexec.exe -Wait -ArgumentList '/I AzureCLI.msi /quiet'

It’s worth noting that if az login returns multiple subscriptions, you need to make sure you are targeting the right one with everything else you do. For example, when I ran az resource list, it came back empty by default because the default subscription wasn’t the one holding my resources. I needed to target the right subscription with:

az account set -s {id}

(The id can be found by inspecting the output of az login, or by running az account list.)
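If you script this, you don’t need to eyeball the az login output. Both `az login` and `az account list` print a JSON array of subscriptions with `id`, `name`, `isDefault` and `state` fields. A minimal Python sketch that picks the id out of that output is below. The sample JSON, its subscription names and its ids are made up for illustration.

```python
import json

# Hypothetical sample of the JSON array `az login` / `az account list` prints
# (trimmed to the fields used here; names and ids are made up).
SAMPLE_ACCOUNTS = """
[
  {"id": "00000000-0000-0000-0000-000000000001", "name": "Pay-As-You-Go",
   "isDefault": true, "state": "Enabled"},
  {"id": "00000000-0000-0000-0000-000000000002", "name": "Testing Subscription",
   "isDefault": false, "state": "Enabled"}
]
"""


def subscription_id(accounts_json: str, name: str) -> str:
    """Return the id of the single enabled subscription called `name`."""
    accounts = json.loads(accounts_json)
    matches = [a["id"] for a in accounts if a.get("name") == name and a.get("state") == "Enabled"]
    if len(matches) != 1:
        raise ValueError(f"expected one enabled subscription named {name!r}, found {len(matches)}")
    return matches[0]


def default_subscription_id(accounts_json: str) -> str:
    """Return the id of whichever subscription is currently the default."""
    return next(a["id"] for a in json.loads(accounts_json) if a.get("isDefault"))


print(subscription_id(SAMPLE_ACCOUNTS, "Testing Subscription"))
```

The CLI can also do the filtering itself, e.g. az account list --query "[?name=='Testing Subscription'].id" --output tsv. az account set -s also accepts a subscription name instead of an id.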

Then, we can create a storage account with:

az storage account create --name dbsecstorageaccount --resource-group TestingThings --kind StorageV2 --access-tier Hot

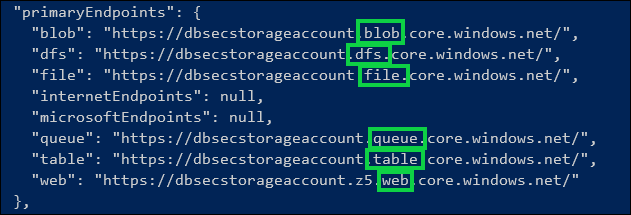

Here’s what I found interesting while trying to understand this stuff: creating that storage account also created all of the endpoint types mentioned above. It’s on me to either be more granular in what I ask for, or make configuration changes as needed.

We can see that, by default, the web endpoint is up; I just have nothing in it.

There are no real surprises here. It’s well documented, and it’s also the reason the account name needs to be globally unique: Azure pre-registers all the endpoints for you. But it’s definitely something you could get caught out by.
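Because the account name becomes part of every endpoint hostname, it has to be 3 to 24 characters of lowercase letters and digits. The Python sketch below checks that format locally and derives the blob, file, queue and table endpoints for the public Azure cloud. The static website (web) endpoint is left out because its hostname includes an extra region-specific segment. A local check can’t tell you whether a name is already taken; az storage account check-name --name <name> asks Azure that.

```python
import re
from typing import Dict

# Storage account names: 3-24 characters, lowercase letters and digits only.
_ACCOUNT_NAME = re.compile(r"[a-z0-9]{3,24}")
# Endpoints for the data services listed earlier (public Azure cloud).
_SERVICES = ("blob", "file", "queue", "table")


def service_endpoints(account: str) -> Dict[str, str]:
    """Validate the name format and return the per-service endpoint URLs."""
    if not _ACCOUNT_NAME.fullmatch(account):
        raise ValueError(f"invalid storage account name: {account!r}")
    return {service: f"https://{account}.{service}.core.windows.net/" for service in _SERVICES}


for service, url in service_endpoints("dbsecstorageaccount").items():
    print(service, url)
```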

The primary setting controlling this access is in the “Firewalls and virtual networks” section.

It’s really simple to lock down the networks that can access the endpoints, either by IP range or by virtual networks you’ve configured in Azure.
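To sanity-check an IP allow list before applying it (for example with az storage account network-rule add --ip-address and az storage account update --default-action Deny), you can model the decision locally. The Python sketch below only answers "does this client IPv4 address fall inside any allowed address or CIDR range?". It is not how Azure evaluates rules internally, and the example ranges are IETF documentation ranges, not real networks.

```python
import ipaddress
from typing import Iterable


def is_allowed(client_ip: str, allowed: Iterable[str]) -> bool:
    """True if client_ip falls inside any entry of an IPv4 address/CIDR allow list."""
    address = ipaddress.IPv4Address(client_ip)
    # strict=False tolerates entries like 203.0.113.5/24; a bare address becomes a /32.
    return any(address in ipaddress.IPv4Network(entry, strict=False) for entry in allowed)


allow_list = ["203.0.113.0/24", "198.51.100.9"]  # hypothetical office range plus one host
print(is_allowed("203.0.113.7", allow_list), is_allowed("192.0.2.1", allow_list))
```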

Storage Explorer

If you are looking for a tool that makes it easy to work with storage accounts as if they were traditional file servers, download Azure Storage Explorer.

AzCopy

A tool for copying files to, from and between Azure storage accounts. It can also copy from AWS. (Don’t forget to assign the ‘Storage Blob Data Contributor’ role to the account doing the copying. MSFT have done a good job of separating ‘data’ access from administrative access with this role.)

Login:

.\azcopy.exe login

Then, assuming our storage account name is “dbsectest”, we’d use:

.\azcopy make "https://dbsectest.blob.core.windows.net/testcontainer"

to create a new container.

Then:

.\azcopy copy "C:\tmp\Biztalk Server\*" "https://dbsectest.blob.core.windows.net/testcontainer" --recursive

If that’s working, it will look like this:

PS C:\azcopy> .\azcopy copy "C:\tmp\Biztalk Server\*" "https://dbsectest.blob.core.windows.net/testcontainer" --recursive

INFO: Scanning...

INFO: Using OAuth token for authentication.

Job ed1e4c7b-bb1e-1e4f-6922-01af2742dd9f has started

Log file is located at: C:\Users\ChadDuffey\.azcopy\ed1e4c7b-bb1e-1e4f-6922-01af2742dd9f.log

9.1 %, 3 Done, 0 Failed, 15 Pending, 0 Skipped, 18 Total, 2-sec Throughput (Mb/s): 4.9806
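Since AzCopy lends itself to scripts, here is a minimal Python sketch of wrapping the copy above. It builds the argument list, runs AzCopy, and parses progress lines like the one shown. Several assumptions are baked in: `azcopy` is on the PATH, and progress lines keep exactly this format (it may change between versions). The helper names are hypothetical. Passing a list instead of a shell string keeps the space in "Biztalk Server" intact and hands the `*` wildcard to AzCopy unexpanded. AzCopy also has an `--output-type json` option, which is probably the sturdier choice for anything long-lived.

```python
import re
import subprocess
from typing import Dict, Iterator, List, Optional, Union

# Matches progress lines such as:
# "9.1 %, 3 Done, 0 Failed, 15 Pending, 0 Skipped, 18 Total, 2-sec Throughput (Mb/s): 4.9806"
_PROGRESS_RE = re.compile(
    r"(?P<percent>[\d.]+) %, (?P<done>\d+) Done, (?P<failed>\d+) Failed, "
    r"(?P<pending>\d+) Pending, (?P<skipped>\d+) Skipped, (?P<total>\d+) Total"
    r"(?:, 2-sec Throughput \(Mb/s\): (?P<throughput>[\d.]+))?"
)
_FLOAT_FIELDS = {"percent", "throughput"}
Progress = Dict[str, Union[int, float]]


def copy_args(source: str, account: str, container: str, recursive: bool = True) -> List[str]:
    """Argument list for `azcopy copy` into a blob container (assumes azcopy is on PATH)."""
    args = ["azcopy", "copy", source, f"https://{account}.blob.core.windows.net/{container}"]
    if recursive:
        args.append("--recursive")
    return args


def parse_progress(line: str) -> Optional[Progress]:
    """Return the counters from one progress line, or None for any other line."""
    match = _PROGRESS_RE.search(line)
    if match is None:
        return None
    return {
        name: float(value) if name in _FLOAT_FIELDS else int(value)
        for name, value in match.groupdict().items()
        if value is not None
    }


def run_copy(args: List[str]) -> Iterator[Progress]:
    """Run azcopy, yield each parsed progress update, and raise if it exits non-zero."""
    with subprocess.Popen(args, stdout=subprocess.PIPE, text=True) as proc:
        for line in proc.stdout:  # text mode treats \r, \n and \r\n as line endings
            progress = parse_progress(line)
            if progress is not None:
                yield progress
    if proc.returncode != 0:
        raise subprocess.CalledProcessError(proc.returncode, args)
```

Usage would be something like `for p in run_copy(copy_args(r"C:\tmp\Biztalk Server\*", "dbsectest", "testcontainer")): print(p["percent"], p["failed"])`, bailing out early if `failed` starts climbing.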

In the Azure portal, it’s going to look like this:

There are more supported copy scenarios than you’d expect, including AWS to Azure and Azure to Azure. The interesting thing, though, is that there are more authorization options: service principals, with either a client secret or a certificate, are supported. This makes AzCopy work well for automated tasks and scripts. The documentation even suggests using wget or equivalent to pull the latest version of AzCopy at the top of the script, which is neat.

More detail here.

Create a Shared Access Signature

Simple example, but if I wanted to temporarily share content with someone and also define CRUD rights, this is the way to roll. (Note that this would usually be done in code as part of a workflow, but it demonstrates the power of the feature.)

With this, I can provide safe, time-bound access to a resource in my storage account without managing identities, similar to Dropbox shared links. There seems to be a lot of opportunity to build sharing services for an organization with this feature.
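Before handing a SAS URL to someone, it can help to check what it actually grants. The constraints travel as query parameters: `sp` (permissions), `st`/`se` (start/expiry), `sr` (resource type) and `sig` (the signature). The Python sketch below reads them back out. It does not verify the signature; only the service can do that. It also assumes `se` uses the full `YYYY-MM-DDThh:mm:ssZ` UTC form and rejects other ISO 8601 variants. The example URL, blob name and signature are made up.

```python
from datetime import datetime, timezone
from typing import Dict, Optional
from urllib.parse import parse_qs, urlparse

# Blob SAS permission letters (sp) covering the CRUD-style rights discussed above.
PERMISSION_NAMES = {"r": "read", "a": "add", "c": "create", "w": "write", "d": "delete", "l": "list"}


def inspect_sas(url: str, now: Optional[datetime] = None) -> Dict[str, object]:
    """Summarize the constraints a SAS URL carries; does NOT verify the signature."""
    query = parse_qs(urlparse(url).query)

    def first(key: str) -> Optional[str]:
        values = query.get(key)
        return values[0] if values else None

    expiry_raw = first("se")
    if expiry_raw is None:
        raise ValueError("SAS has no signed expiry (se) parameter")
    # Assumes the full UTC form, e.g. 2020-03-01T00:00:00Z.
    expires = datetime.strptime(expiry_raw, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
    if now is None:
        now = datetime.now(timezone.utc)
    return {
        "permissions": [PERMISSION_NAMES.get(letter, letter) for letter in first("sp") or ""],
        "expires": expires,
        "expired": now >= expires,
        "has_signature": first("sig") is not None,
    }


# Hypothetical SAS URL for illustration only.
example = ("https://dbsectest.blob.core.windows.net/testcontainer/report.pdf"
           "?sv=2019-02-02&st=2020-02-01T00%3A00%3A00Z&se=2020-03-01T00%3A00%3A00Z"
           "&sr=b&sp=rw&sig=NOT-A-REAL-SIGNATURE")
print(inspect_sas(example))
```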

Connect to VM

It’s also possible to have Storage Explorer generate a net use command that lets Azure IaaS machines connect to the file share (assuming permissions are set correctly). Just right-click the share and choose “connect file share to VM”:

net use [drive letter] \\dbsecstorageaccount.file.core.windows.net\test /u:dbsecstorageaccount [storage account key]
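If you want to script that mapping on the VM, the Python sketch below builds the same `net use` command as an argument list. It reads the key from an environment variable so the key isn’t hard-coded. The variable name `AZURE_STORAGE_KEY` and the `net_use_args` helper are assumptions for illustration. Remember that this key gives full read/write access to the share, as noted in the Azure Files section.

```python
import os
from typing import List, Optional


def net_use_args(drive: str, account: str, share: str, key: Optional[str] = None) -> List[str]:
    """Build the `net use` command that maps an Azure file share to a drive letter."""
    if key is None:
        # Assumption: the key is supplied via an environment variable rather than hard-coded.
        key = os.environ["AZURE_STORAGE_KEY"]
    unc_path = "\\\\{}.file.core.windows.net\\{}".format(account, share)
    return ["net", "use", drive, unc_path, "/u:" + account, key]
```

On the Windows VM you would then run `subprocess.run(net_use_args("Z:", "dbsecstorageaccount", "test"), check=True)`. SMB needs outbound TCP 445 to be open for the mount to work.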